Jordan Fuqua

The Metaverse

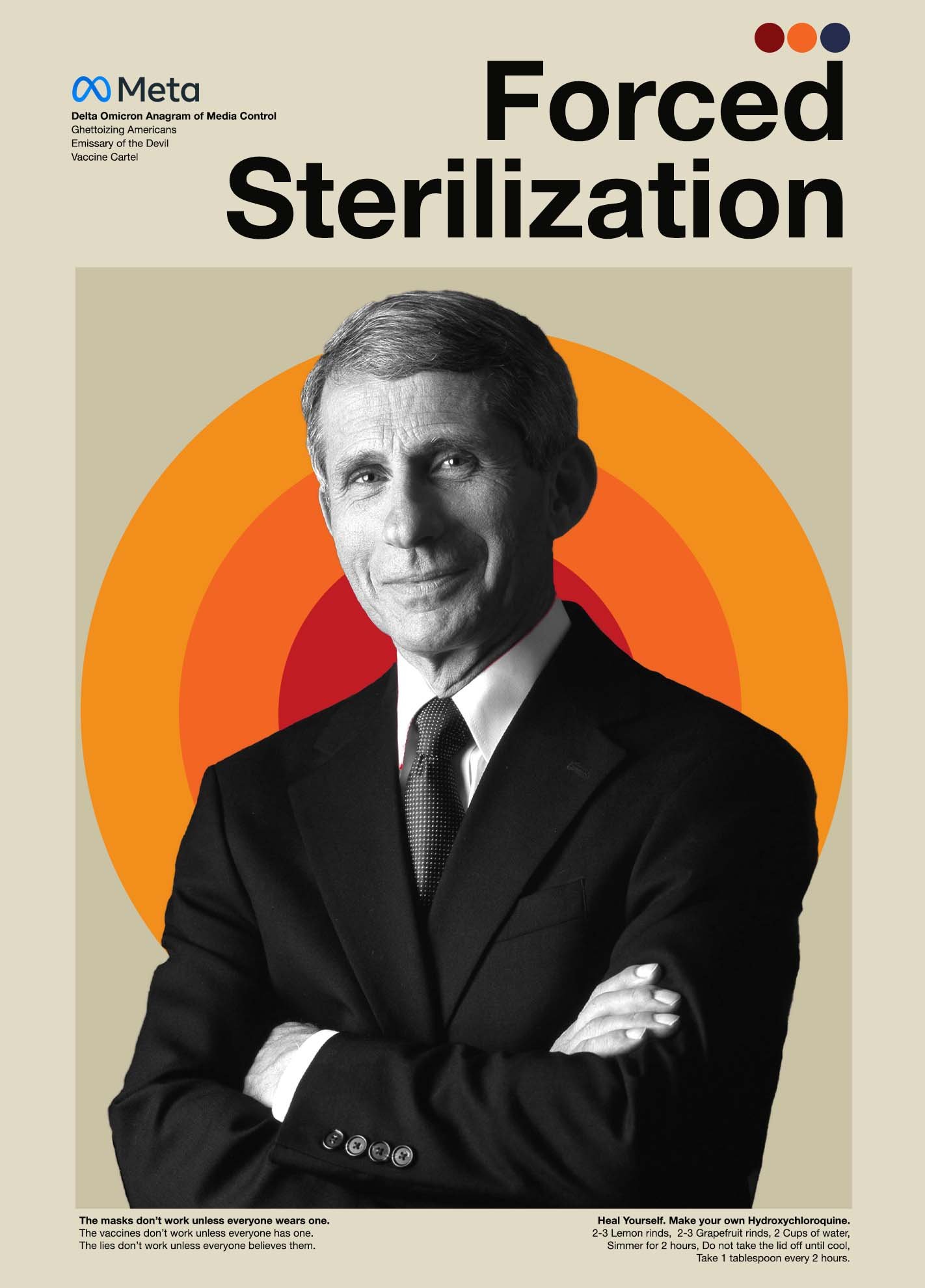

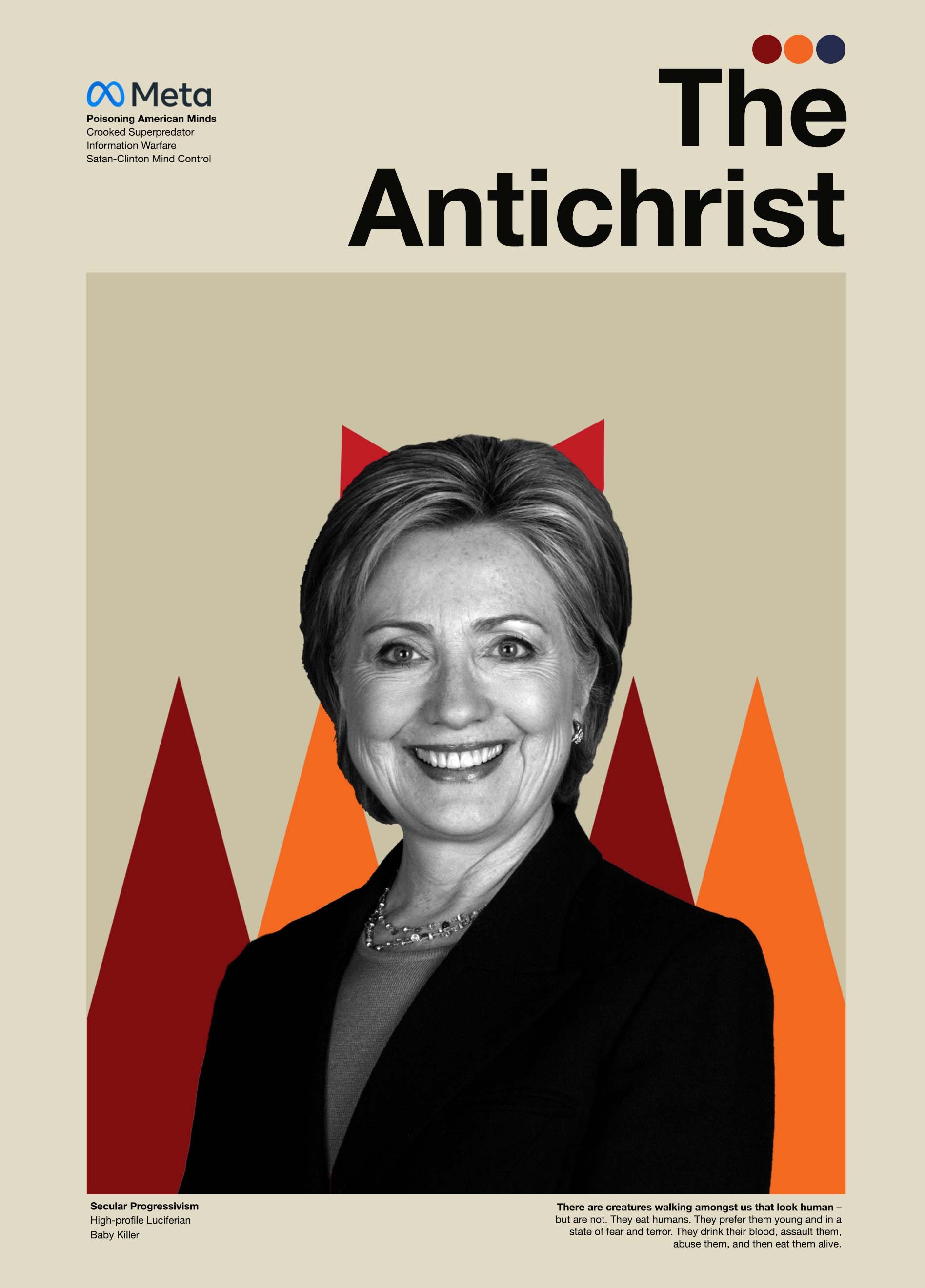

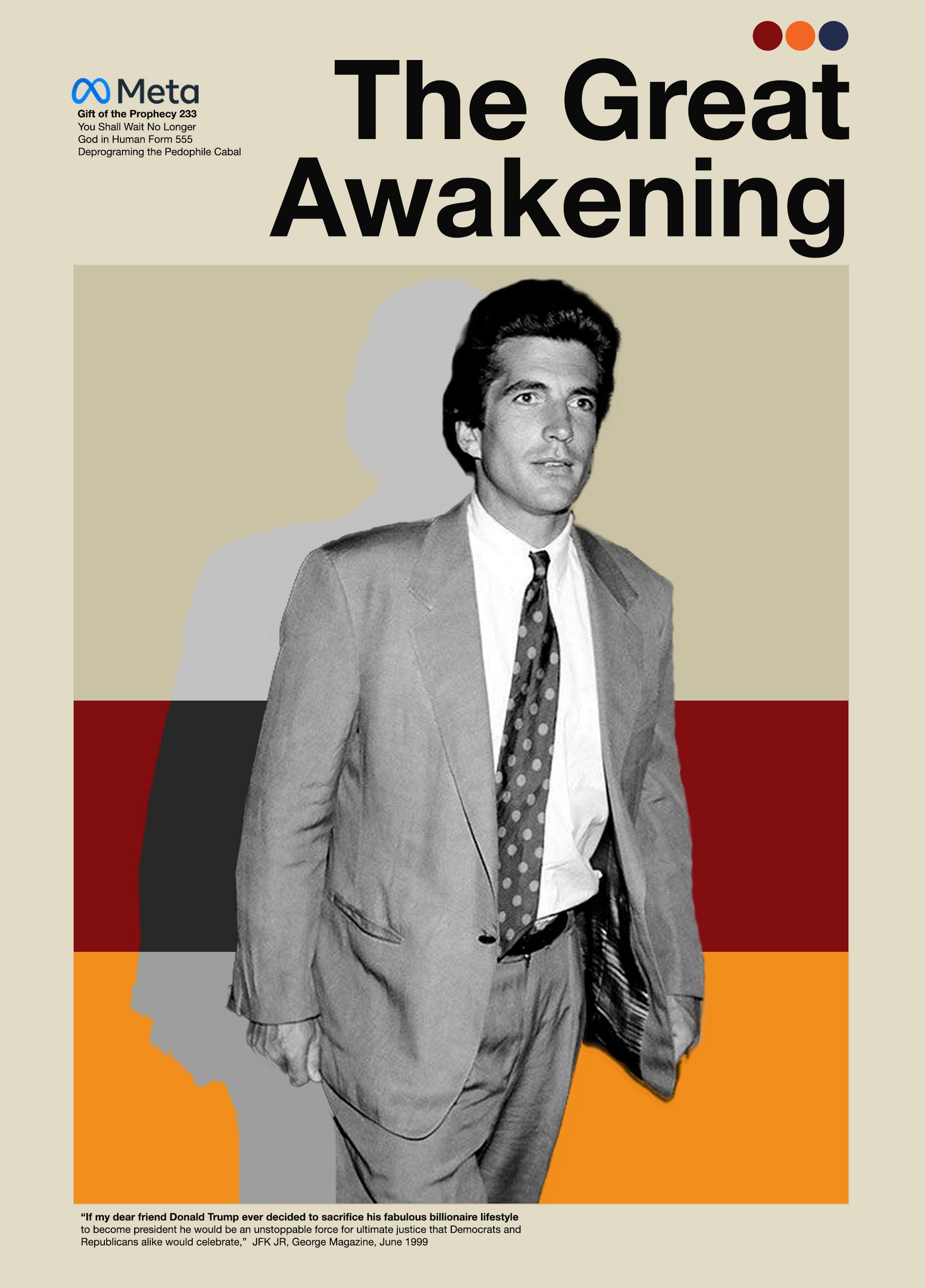

The ability to classify dangerous content easily is a critical feature of any social media site. Not all user-created posts are good; they can't be. At one point, the idea was simply to let people who share a common interest connect online. Once Facebook realized its potential, however, keeping users on the platform longer by recommending tailored content became central to new economic incentives. The platform became a monetary instrument for learning human behavior, with the goal of pinpointing data and selling it. But Facebook's content can become so harmful that it encourages, recruits for, and spreads abuse. It traumatizes viewers who never asked to see ISIS videos. It radicalizes users who are easily targeted by reactionary content.

A growing body of research on the link between susceptibility to misinformation and broad cognitive patterns [1] has found that people who rely on gut-reaction thinking styles are more likely to buy into conspiracy theories [2]. Harmful algorithmic design makes this problem worse online. Researchers found that new users living in Republican states were recommended QAnon groups (QAnon is a conspiracy theory about cannibalistic, pedophile elites in America) within just two days of joining the platform [3]. By examining Facebook's recommendation algorithm and how Americans are exposed to propaganda, we can begin to assign responsibility and advocate for healthier algorithmic design.

The Metaverse seeks to describe the way users consume extremist content, even subconsciously. Propaganda is being sewn into the fabric of American homes; QAnon conspiracies and COVID misinformation are the new American canon. Research conducted by Cybersecurity for Democracy found that among far-right sources, those known for spreading misinformation received double the interactions of far-right sources not known for misinformation. Compared with far-left content, far-right content drew more than six times as many interactions [4]. Simply put: Americans are being exposed to extremist content, and millions are susceptible to its persuasion. The time has come to demand a more honest content moderation policy and to strive for healthier algorithmic design.

[1] Pennycook, G., Cannon, T.D., and Rand, D.G. “The Psychology of Fake News.” (2020).

[2] Stecula, Dominik A. “Social Media, Cognitive Reflection, and Conspiracy Beliefs.” (2021).

[3] Zadrozny, Brandy. “What Facebook Knew About How It Radicalized Users.” NBC News (2020).

[4] Cybersecurity for Democracy. “Far Right News Sources on Facebook More Engaging.” Medium (2020).

Contact me

jordanfuqua1@gmail.com

(913) 626-0063

IG: Fuqua.design